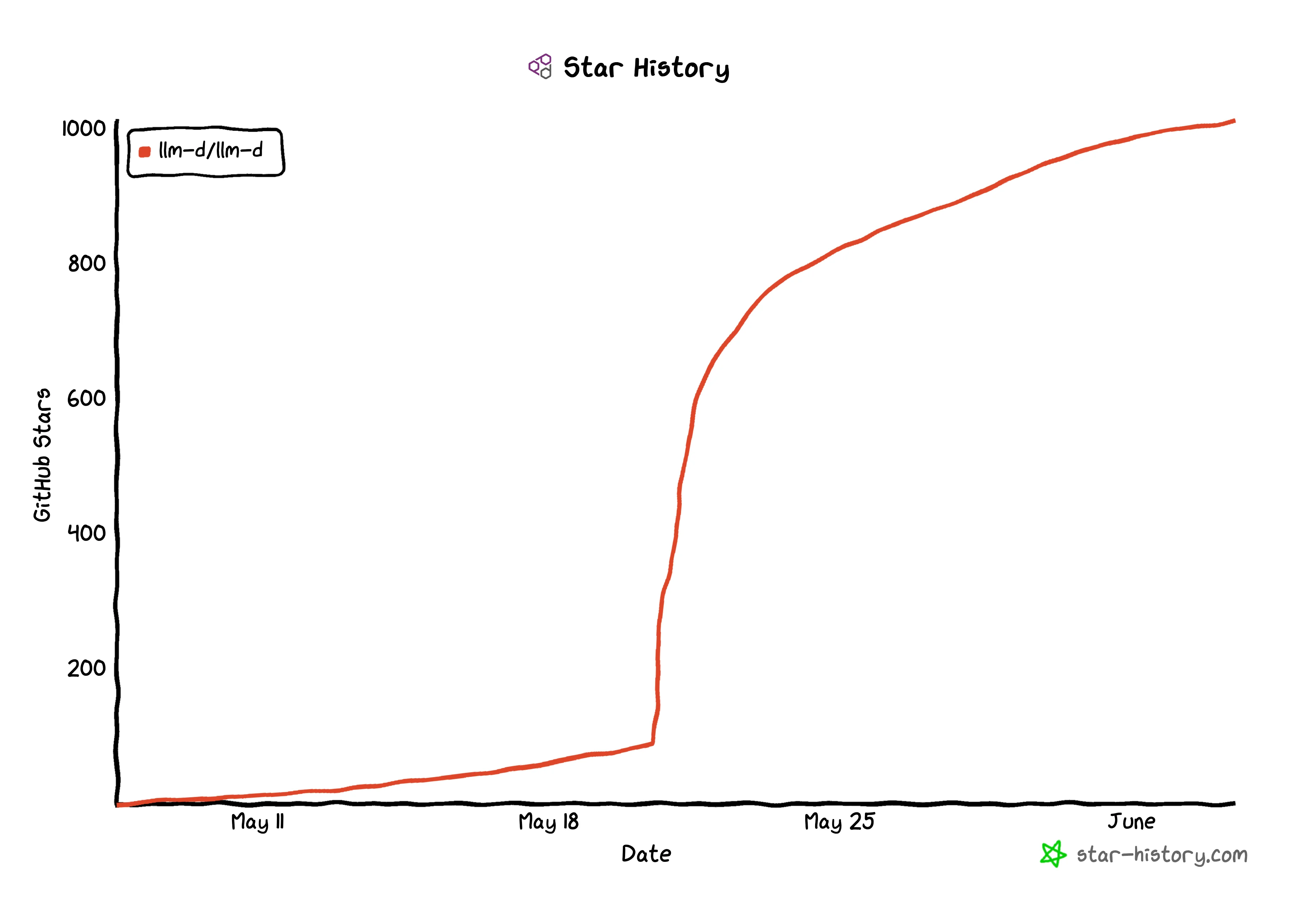

llm-d 0.5: Sustaining Performance at Scale

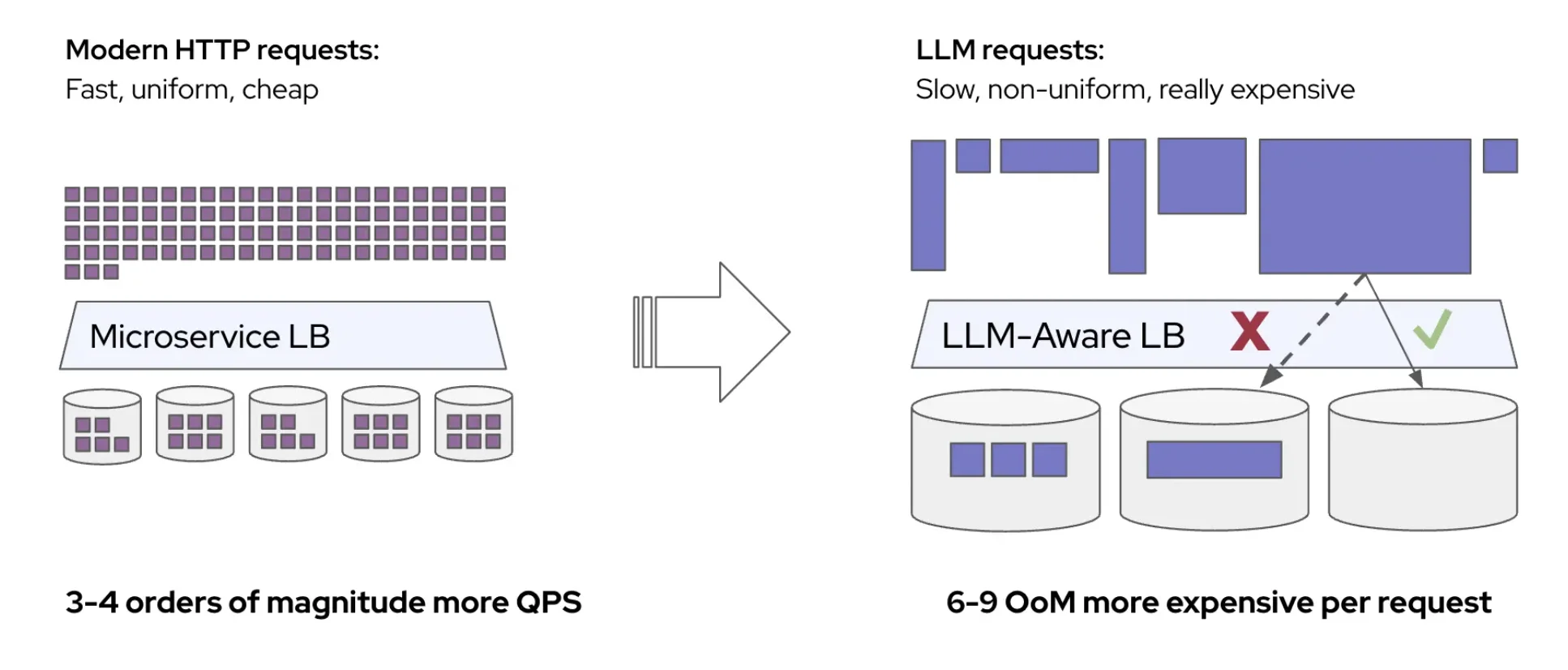

In our previous release (v0.4), we focused on improving the end-to-end latency of production inference, introducing speculative decoding and extending prefill/decode disaggregation across a broader set of accelerator architectures. That work established llm-d’s ability to deliver state-of-the-art latency along the critical serving path. Sustaining low latency, however, increasingly depends on three things: how KV-cache pressure is handled once GPU memory is saturated, whether cached state can be reused across replicas instead of being repeatedly rebuilt, and how requests are routed when workloads mix adapters, models, and availability requirements.

With v0.5, llm-d expands its focus from peak performance to the operational rigor required to sustain performance at scale. This release prioritizes reproducibility, resilience, and cost efficiency, with concrete improvements across the following areas:

- Developer Experience and Reproducibility: We have simplified the benchmarking workflow with dedicated, in-guide benchmark support, allowing users to validate each “well-lit path” with a single command.

- Hierarchical KV Offloading: A new storage architecture decouples cache capacity from GPU memory through native CPU and filesystem tiers.

- Advanced Scheduling: Cache-aware routing now supports LoRA adapters and active-active high availability.

- Resilient Networking: A new transport backend (UCCL) improves stability in congested networks.

- Autoscaling Updates: We have introduced scale-to-zero capabilities so that intermittent workloads can be served cost-efficiently.